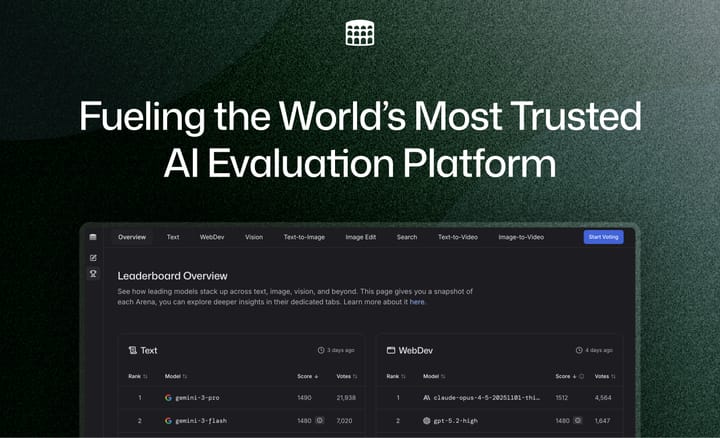

Fueling the World’s Most Trusted AI Evaluation Platform

We’re excited to share a major milestone in LMArena’s journey: we’ve raised $150M in Series A funding led by Felicis and UC Investments (University of California), with participation from Andreessen Horowitz, The House Fund, LDVP, Kleiner Perkins, Lightspeed Venture Partners, and Laude Ventures.